RHCA - EX180

Red Hat Certified Specialist in Containers and Kubernetes

Podman host setup

dnf module install container-tools

dnf install -y buildah

Podman basics

Registry file: /etc/containers/registries.conf
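
To see which registries are searched for short image names, the registry file can be read directly. A minimal sketch, assuming the file uses the version 2 (TOML) format with an `unqualified-search-registries` key; `search_registries` is a hypothetical helper name, not part of Podman.

```shell
# Print the unqualified-search-registries line from a registries.conf file.
# The default path is the registry file named above; pass another path to override.
search_registries() {
  conf="${1:-/etc/containers/registries.conf}"
  if [ -r "$conf" ]; then
    grep -E '^[[:space:]]*unqualified-search-registries' "$conf" \
      || echo "unqualified-search-registries is not set in $conf"
  else
    echo "cannot read $conf"
  fi
}

search_registries
```

If the key is missing, short names such as rhscl/mariadb-102-rhel7 may not resolve, and the full registry path is needed.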

Login to a registry

podman login registry.access.redhat.com

Search for images

podman search mariadb

Inspect images without downloading

skopeo inspect docker://registry.access.redhat.com/rhscl/mariadb-102-rhel7

Download images

podman pull registry.access.redhat.com/rhscl/mariadb-102-rhel7

List images

podman images

Inspect images. Useful for locating the Config.User value.
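
When only the configured user is needed, a Go template can narrow the inspect output to that one field. A minimal sketch, assuming the image is already pulled and that the field is exposed as `.Config.User`; `image_user` is a hypothetical helper name.

```shell
# Print only the user an image is configured to run as.
image_user() {
  podman inspect --format '{{.Config.User}}' "$1"
}

# Run it only where podman is installed.
if command -v podman >/dev/null 2>&1; then
  image_user registry.access.redhat.com/rhscl/mariadb-102-rhel7:latest \
    || echo "image not found locally; pull it first"
fi
```

The value printed is the UID to use when setting ownership on the host volume directory.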

podman inspect registry.access.redhat.com/rhscl/mariadb-102-rhel7:latest

Configure container volume path

sudo mkdir /srv/mariadb

sudo chown -R 27:27 /srv/mariadb # UID found in podman inspect

sudo semanage fcontext -a -t container_file_t "/srv/mariadb(/.*)?"

sudo restorecon -Rv /srv/mariadb

Run image

-d run detached (in the background)

-e set an environment variable (one -e per variable)

-p local_port:container_port

-v /local/path:/path/in/container
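
The flags above combine into a single command line, and every option has to come before the image name, because anything after the image is handed to the container as its command. As a sketch of that ordering, the hypothetical helper `run_command` prints the assembled command instead of running it.

```shell
# Print a podman run command line with every option placed before the image.
# Nothing is executed; the function only echoes the command it assembled.
run_command() {
  image=$1
  host_dir=$2
  set -- podman run -d \
    -e MYSQL_USER=user -e MYSQL_PASSWORD=pass -e MYSQL_DATABASE=db \
    -p 33306:3306 \
    -v "${host_dir}:/var/lib/mysql:Z" \
    "$image"
  echo "$*"
}

run_command registry.access.redhat.com/rhscl/mariadb-102-rhel7 /srv/mariadb
```

Printing first is a cheap way to review the port and volume mappings before starting the container.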

# Options must come before the image name.
# :Z isn't needed if SELinux was configured manually.
podman run -d -e MYSQL_USER=user \
  -e MYSQL_PASSWORD=pass -e MYSQL_DATABASE=db \
  -p 33306:3306 \
  -v /srv/mariadb:/var/lib/mysql:Z \
  rhscl/mariadb-102-rhel7

Basic container status and names

podman ps

Enter a container in an interactive shell

podman exec -it container-name /bin/bash

Commit changes from a running container to a new image

podman commit container-name image-name

Export image

podman save image-name > /path/to/image.tar

Remove images

podman rmi image-name --force

Restore/Load image

podman load -i /path/to/image.tar

Dockerfile Basics

Container for scanning a network.

# Start with a base image
FROM registry.access.redhat.com/ubi8

# Maintainer information
MAINTAINER ClusterApps <mail@clusterapps.com>

# Run commands to build the container
# Do as much as possible with the fewest RUN lines
RUN yum --assumeyes update && \
    yum --assumeyes install \
    nmap iproute procps-ng \
    bash && \
    yum clean all

# The entrypoint is the command that runs when the container starts
ENTRYPOINT ["/usr/bin/nmap"]

# The default arguments for the entrypoint
CMD ["-sn", "192.168.252.0/24"]
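
With the exec form used here, arguments given after the image name on `podman run` replace CMD, while ENTRYPOINT stays fixed. The sketch below only models that rule in plain shell. `effective_command` is a hypothetical helper, and 10.0.0.0/24 is an example network that does not come from these notes.

```shell
# Model how the exec-form ENTRYPOINT and CMD from the Dockerfile combine.
# Arguments passed after the image name replace CMD; ENTRYPOINT is kept.
effective_command() {
  entrypoint="/usr/bin/nmap"
  default_cmd="-sn 192.168.252.0/24"
  if [ "$#" -gt 0 ]; then
    echo "$entrypoint $*"
  else
    echo "$entrypoint $default_cmd"
  fi
}

effective_command                   # models: podman run localhost/notes180
effective_command -sn 10.0.0.0/24   # models: podman run localhost/notes180 -sn 10.0.0.0/24
```

This is why the same image can scan a different network without a rebuild.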

Build the image with a tag

podman build -t notes180 .

List images

podman images

Run the image

podman run localhost/notes180

OpenShift Basics

Set up oc completion

source <(oc completion bash)

Create a new project

oc new-project nginx

Create a new application based on an image

oc new-app bitnami/nginx

Basic information about the project

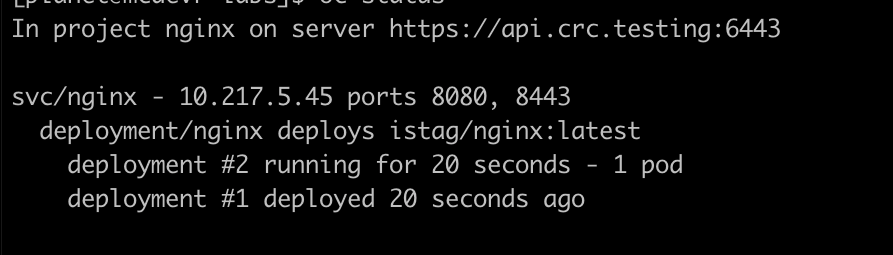

oc status

Get more detailed information about the project

oc get all

Get full details about a pod

oc describe pod/nginx-84654f9574-9gpnt

Get full details about a deployment

oc describe deployment.apps/nginx

Application deployments

Generate a YAML file to use as a base.

oc create deployment nginx --image=bitnami/nginx --dry-run=client -o yaml > newapp.yml

Create a temporary deployment using the YAML file.

oc create -f newapp.yml

Create the service by exposing the deployment

# dry run to add to YAML file

oc expose deployment --port=8080 --dry-run=client nginx -o yaml >> newapp.yml

# run to create the service

oc expose deployment --port=8080 nginx

Expose the service

oc expose svc nginx --dry-run=client -o yaml >> newapp.yml

New app with parameters, as a deployment-config, and with labels (app=database)

oc new-app --name mdb -l app=database -e MYSQL_USER=dbuser -e MYSQL_PASSWORD=SuperAwesomePassword -e MYSQL_DATABASE=dbname bitnami/mysql --as-deployment-config

Delete the temporary application

oc delete all --all

Edit the YAML file and break up the sections into proper YAML.

Add --- at the beginning of a section

Add ... at the end of a section.
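
After editing, a quick count of the markers helps confirm that every section got its own document start. A minimal sketch in plain shell. `manifest_summary` is a hypothetical helper, and the demo file holds stub documents only, not complete resources.

```shell
# Count the YAML documents and list the resource kinds in a manifest file.
manifest_summary() {
  file=$1
  docs=$(grep -c '^---[[:space:]]*$' "$file")
  kinds=$(sed -n 's/^kind:[[:space:]]*//p' "$file" | tr '\n' ' ')
  echo "documents=$docs kinds=${kinds% }"
}

# Demo on a throwaway file with two stub documents.
demo=$(mktemp)
cat > "$demo" <<'EOF'
---
kind: Deployment
...
---
kind: Service
...
EOF
manifest_summary "$demo"   # prints: documents=2 kinds=Deployment Service
rm -f "$demo"
```

Running `manifest_summary newapp.yml` should report one document per kind; a lower document count points at a missing --- line.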

The new nginx app YAML file.

---
apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: nginx
  name: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: nginx
    spec:
      containers:
      - image: bitnami/nginx
        name: nginx
        resources: {}
status: {}
...
---
apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: nginx
  name: nginx
spec:
  ports:
  - port: 8080
    protocol: TCP
    targetPort: 8080
  selector:
    app: nginx
status:
  loadBalancer: {}
...
---
apiVersion: route.openshift.io/v1
kind: Route
metadata:
  creationTimestamp: null
  labels:
    app: nginx
  name: nginx
spec:
  port:
    targetPort: 8080
  to:
    kind: ""
    name: nginx
    weight: null
status: {}
...

Delete API resources defined by a YAML file.

oc delete -f newapp.yml

Using Templates

Get a list of templates

oc get templates -n openshift

Get template details

Review the parameters section for a list of environment variables.
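
When only the parameters are of interest, `oc process --parameters` prints just that list. A minimal sketch, assuming a logged-in `oc` client; `template_params` is a hypothetical wrapper, and its namespace argument defaults to openshift.

```shell
# List only the parameters of a template; the namespace defaults to "openshift".
template_params() {
  oc process --parameters -n "${2:-openshift}" "$1"
}

# Run it only where the oc client is installed.
if command -v oc >/dev/null 2>&1; then
  template_params mariadb-persistent || echo "could not read the template"
fi
```

Each listed name can be set with -p NAME=value when creating an application from the template.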

oc describe template -n openshift mariadb-persistent

Create a new application from a template

oc new-app --template=mariadb-persistent \
  -p MYSQL_USER=jack -p MYSQL_PASSWORD=password \
  -p MYSQL_DATABASE=jack

Check the status of the deployment.

oc get all

oc describe pod/mariadb-1-qlvrj

Source-to-Image (S2I)

Get a list of templates and image streams

oc new-app -L

Deploy a new app from a git repo
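
In the builder~repository form accepted by `oc new-app`, the part before the tilde names the builder image and the part after it is the source repository. The sketch below splits such a spec with plain parameter expansion; `split_s2i_spec` is a hypothetical helper used only to make the two halves visible.

```shell
# Split an S2I spec of the form builder~repository into its two parts.
split_s2i_spec() {
  spec=$1
  echo "builder=${spec%%\~*} source=${spec#*\~}"
}

split_s2i_spec 'php~https://github.com/clusterapps/simpleapp.git'
# prints: builder=php source=https://github.com/clusterapps/simpleapp.git
```

The builder name should match one of the image streams listed by oc new-app -L.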

oc new-app php~https://github.com/clusterapps/simpleapp.git

Watch the app get built

oc logs -f buildconfig/simpleapp

Review the deployment with oc get all

NAME                            READY   STATUS      RESTARTS   AGE
pod/mariadb-1-deploy            0/1     Completed   0          16m
pod/mariadb-1-qlvrj             1/1     Running     0          16m
pod/simpleapp-1-build           0/1     Completed   0          2m10s
pod/simpleapp-fb5554fd9-4kgnb   1/1     Running     0          89s

NAME                              DESIRED   CURRENT   READY   AGE
replicationcontroller/mariadb-1   1         1         1       16m

NAME                TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)             AGE
service/mariadb     ClusterIP   10.217.5.246   <none>        3306/TCP            16m
service/simpleapp   ClusterIP   10.217.4.16    <none>        8080/TCP,8443/TCP   2m11s

NAME                        READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/simpleapp   1/1     1            1           2m11s

NAME                                   DESIRED   CURRENT   READY   AGE
replicaset.apps/simpleapp-75c686cbb8   0         0         0       2m11s
replicaset.apps/simpleapp-fb5554fd9    1         1         1       89s

NAME                                         REVISION   DESIRED   CURRENT   TRIGGERED BY
deploymentconfig.apps.openshift.io/mariadb   1          1         1         config,image(mariadb:10.3-el8)

NAME                                       TYPE     FROM   LATEST
buildconfig.build.openshift.io/simpleapp   Source   Git    1

NAME                                   TYPE     FROM          STATUS     STARTED         DURATION
build.build.openshift.io/simpleapp-1   Source   Git@26e2f16   Complete   2 minutes ago   41s

NAME                                       IMAGE REPOSITORY                                                           TAGS     UPDATED
imagestream.image.openshift.io/simpleapp   default-route-openshift-image-registry.apps-crc.testing/nginx2/simpleapp   latest   About a minute ago